Bishop Hill

Models, data and the Arctic

Mar 10, 2012

Climate: Mosher  Climate: Surface

While I was at the Met Office the other day, I had a very interesting presentation from Jeff Knight, who IIRC runs the seasonal-to-decadal forecasting unit there. Jeff's talk included discussion of a paper that Lucia had looked at here. Lucia's comments are, as always, very interesting and address a range of concerns that I'm not going to touch on here. The paper itself is here.

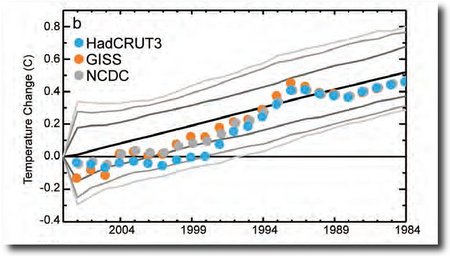

The headline image of Jeff's talk was what the Met Office refers to as "the Celery Stick" graph, which assesses the range of model predictions against data:

ENSO-adjusted global mean temperature changes to 2008 as a function of starting year for HadCRUT3, GISS dataset (Hansen et al. 2001) and the NCDC dataset (Smith et al. 2008) (dots). Mean changes over all similar-length periods in the twenty-first century climate model simulations are shown in black, bracketed by the 70%, 90%, and 95% intervals of the range of trends (gray curves).

As can be seen from the graph, CRUTEM is on the cusp of falling outside the envelope of the projections of the models, although this was surprisingly of little concern to the Met Office. As we saw a few weeks ago, the new version of CRUTEM will be issued soon, and will have an increased warming trend, which is apparently the result of a reassessment of warming in the Arctic. This involves a shift from the "admit our ignorance" approach used now to a new one incorporating a GISS-like extrapolation. The enhanced warming will shift CRUTEM back towards the centre of the envelope of the models.

This bothers me. It seems to me that for assessing model performance, you should only compare models to data, not models to data-plus-extrapolation. If we don't know anything about the Arctic, then we should presumably test models versus data only for those regions of the planet outside the Arctic.
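To make the idea of a like-for-like test concrete, here is a minimal sketch of averaging a model field only over the grid cells where observations exist. Everything in it is an assumption for illustration: the four latitude bands, the anomaly values and the coverage mask are made-up numbers, and `area_weighted_mean` is a hypothetical helper, not anything the Met Office or the paper is known to use.

```python
import math

def area_weighted_mean(field, lats, mask=None):
    """Mean of a lat-lon field, weighting each cell by cos(latitude).

    field: list of rows (one per latitude band), each a list of values.
    lats:  latitude in degrees of each row.
    mask:  optional, same shape as field; True where the cell is included.
    """
    total = 0.0
    weight_sum = 0.0
    for i, lat in enumerate(lats):
        w = math.cos(math.radians(lat))
        for j, value in enumerate(field[i]):
            if mask is not None and not mask[i][j]:
                continue  # skip cells with no observations
            total += w * value
            weight_sum += w
    if weight_sum == 0.0:
        raise ValueError("mask excludes every cell")
    return total / weight_sum

# Hypothetical toy grid: four latitude bands, two cells each (made-up anomalies, deg C).
lats = [-45.0, 0.0, 45.0, 80.0]
model = [[0.2, 0.2], [0.3, 0.3], [0.4, 0.4], [1.5, 1.5]]

# Hypothetical coverage: observations everywhere except the high-latitude band.
observed = [[True, True], [True, True], [True, True], [False, False]]

full_mean = area_weighted_mean(model, lats)              # model over the whole grid
masked_mean = area_weighted_mean(model, lats, observed)  # model where data exist

print(f"model, full grid:      {full_mean:.3f}")
print(f"model, observed cells: {masked_mean:.3f}")
```

In this toy case the masked model mean comes out lower than the full-grid mean, because the strongly warming high-latitude band is left out. It is the masked figure that would be compared against an observational average built from the same cells.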

Richard Betts in the comments advises that the new temperature series will not involve extrapolation. I'm scratching my head here, because I'm sure we discussed this while I was down in Exeter, but I'm going to defer to Richard.

Reader Comments (68)

Mar 10, 2012 at 12:18 PM dogsgotnonose

Please don't be so hard on Richard Betts.

Anyway, he's not listed as one of the authors of the paper, so why kick his shins for any shortcomings it might have?

michael hart wrote:

"I was looking for some data on atmospheric CO2 concentrations recently, and also wondered why some of them only went up to 2008. Does anybody have a link for up to date figures?"

See this page which has links to monthly and annual data. The monthly file has both measured and seasonally-adjusted values.

A while back Richard Betts put forward the following MO page for information as to why the MO forecasts had proved not to be where they should be:-

“Global-average annual temperature forecast”

The page carries the following statements (my bold):-

Some observational estimates take more account of the Arctic warming than Met Office - CRU, hence the need to compare our global forecast temperatures to the range of global records.

the predictions lie on average near the centre of the observational estimates.

Please read it all, just to make sure about context:-

http://www.metoffice.gov.uk/research/climate/seasonal-to-decadal/long-range/glob-aver-annual-temp-fc

And when you have, and you know what scientific standing observational estimates have, please let me know.

Also at the bottom of the page is:-

Figure 3: The difference in coverage of land surface temperature data between 1990-1999 and 2005-2010. Blue squares are common coverage. Orange squares are areas where we had data in the 90s but don't have now and the few pale green areas are those where we have data now, but didn't in the 90s. The largest difference is over Canada.

Why do the MO no longer have the Canadian land surface temperature data? I am not aware of any of the stations being closed. If they have been, could somebody please point me in the right direction?

I wonder who decided to exclude the Canadian data and why? Also who now wants it back and why?

Richard Betts, I recognise that I could be reading this wrongly, if so please let me know where I am going wrong.

Richard Lindzen summed it up very well when he told the House of Commons that while we can't predict the future, Climate Science can't predict the past.

Bishop Hill writes:

'It seems to me that for assessing model performance, you should only compare models to data, not models to data-plus-extrapolation. If we don't know anything about the Arctic, then we should presumably test models versus data only for those regions of the planet outside the Arctic.'

That seems to me fundamentally misguided. It seems to rely on the idea that there are some measurements that do not rely on extrapolation. But I don't think there are any such observations in climate science or anywhere else in science. Certainly temperatures of the globe-outside-the-Arctic rely on many extrapolations.

It seems to me that the correct approach is to estimate, as best as possible, the error on measurements. How accurate are they? Some will be better, some will be worse. None will be perfect. The idea of working only with 'data' seems to be motivated by the idea that these are somehow perfect, uncontaminated, objective. But this is not so. There is no 'perfect' data that can easily be isolated from 'imperfect', 'data-plus-extrapolation'.

We need to make our best measurements and our best estimates of their accuracy. The process of estimating accuracy will be valuable. If it shows that the measurements are of little or no use for understanding what is happening it should at least indicate how measurements can be improved. Otherwise it will show how much confidence can be placed in the measurements. This is critical even in the case of mythical data-that-does-not-involve-extrapolations.

(In some specific cases there may be two sets of data, one of which is much worse than the other. I suspect that is not the case with Arctic temperatures. The quality of global temperature data is generally highly variable. But even if it is true in this case, I still argue my overall methodological point is sound. It might be convenient to agree on a quality threshold below which data should not be used, but this is not a division into perfect and imperfect. It is a pragmatic decision based on an underlying analysis.)

I have to say that the logic here which bothers me is something that I see recurring again and again on this site. More than anything it is this clause: 'If we don't know anything about the Arctic...' The logic here is that we don't know for certain about Arctic temperatures therefore we don't know anything. That is the leap I cannot get my head around. To me nothing in science is absolutely certain. Indeed, the genius of science is all about how we can gain some knowledge precisely while understanding that nothing is certain. But here is the move from the need for extrapolation to 'we don't know anything'.

I suspect this attitude is a shorthand, generated by background assumptions from a wider political debate. I presume it might mean something more like 'not good enough to base important policy decisions on'. But I'm still battling to make sense of this attitude.

I would be a lot happier (have anything but zero confidence) that the Met Office could predict the future, if it were prepared to admit it had failed to predict the future in the past.

Only when people admit they have failed, do they look for the real problems and admit their limitations.

And when you are honest enough to admit your failings, when you do claim success, people know you are honestly claiming success and not just manipulating the models to post-predict the past.

Unfortunately, this dishonesty seems to be institutionalised in the Met Office. You can't sack someone for a bad prediction, if that was genuinely the best they were able to do. But you can sack someone for hiding the fact they can't make predictions.

And only when they admit their failings will they have the humility and insight to make believable predictions.

JK "The idea of working only with 'data' seems to be motivated by the idea that these are somehow perfect, uncontaminated, objective."

I think the point Bishop Hill is making is this: if you have two predictions (rise + "others") and you claim that your prediction of the rise was correct because (your prediction of) the "other" was off, all you are doing is fraudulently claiming an accurate prediction by fraudulently assigning all the error to "others", allowing you to FRAUDULENTLY claim that your "main" prediction was right.

Let me use an example:

You bump into a person on the street. Your wallet falls on the path. They hand it back to you. You put it in your pocket, only to find later that the money and cards are missing.

In court, they claim they were honest because they gave you back the wallet. They claim this shows that it could not have been them that used your credit cards, even though there is strong circumstantial evidence.

OK, if they had handed in the wallet they had found to a police station, that would show they were honest. But the handing back of the wallet was clearly a ploy to hide the bigger crime. You have to look at the whole picture. What was the net effect, not what was the isolated action.

Likewise all these "others" which are constantly being post-manipulated to prove that the predictions were right can't be taken out of the picture. It is all part of one prediction.

Either they can predict the climate ... or as we all know THEY CAN'T

The models will never be "right" because they wrongly assume radiation from the atmosphere transfers thermal energy to a warmer surface. All it can do is slow the radiative cooling rate, but not the rate of cooling due to evaporation, diffusion and other processes. These processes then tend to increase their transfer rates to compensate.

Nor do we see in the models any mention of the upwelling backradiation of incident solar IR radiation captured by water vapour, carbon dioxide etc..

I have spent some 1,000 hours or more researching this and writing a comprehensive summary of a wide range of reasons why carbon dioxide has no warming effect and probably a slight net cooling effect.

At least six scientists have now read the 6,600 word paper, including three official reviewers. All are, frankly, somewhat excited about it I gather, and plan to translate it to German as well. It will be available within 30 hours at http://principia-scientific.org/

I am more than willing to discuss any matter raised therein, provided people discuss the physics, rather than the personalities or whatever they may think or wish to imply in any effort to discredit the material on such grounds.

Paul Homewood reports that GHCN adjustments produce an artificial warming right across the arctic, by cooling the past (a warm period around 1940).

This story has now been picked up by WUWT.

Mike Haseler writes:

'Either they can predict the climate ... or as we all know THEY CAN'T'

Yes, this is exactly what I am struggling with. For me scientific questions are not of the form 'can we predict x?' The answer is boring - no. The form of a scientific question is 'how well can we predict x?' Answering this requires numbers and measurements. It is about how close? What is the distance? What is the accuracy?

Is your assertion 'cannot predict' a shorthand for 'cannot predict well enough'? Does it somehow derive from Popper? (I think Popper's idea that theories should be falsifiable is a useful rule of thumb but it falls apart if you try to make it rigorous. But that is not much different to all attempts to rigidly codify 'the scientific method'.)

And what would it even mean to 'predict the climate' anyway? A prediction of global mean temperature would be one thing (although this would never be perfect). But that would be a very, very long way from 'the climate'. There are many dimensions to the climate. We can predict them with differing accuracies, in a way that cannot be summarised in a single binary.

If you want to assert that you don't want to pay a carbon tax then that is a fair enough binary yes / no answer. What is appropriate in politics is not appropriate in science, though.

Hi Bish

There is a very important correction that I'd like to ask you to make to your post! (I've only just seen it, due to being busy all weekend.)

I think you have mis-remembered the detail of Jeff's talk, when he talked about the update of the dataset from HadCRUT3 to HadCRUT4. The new dataset will not use extrapolation across the Arctic!

Instead, the new dataset will include additional land stations for which we did not previously have data. Some of these are in high-latitude Canada and Siberia, which have shown warming in recent times. This is significant enough to affect the recent global temperature estimates, and will also result in a smaller difference between the obs and the model projection used in the Knight et al paper linked to in your post. When the dataset has been published, Jeff and colleagues will quantify this effect.

So, the differences in the new dataset are simply due to using more complete data! The concerns of readers in the above comments about extrapolation are therefore unfounded - we are not doing it!

Please could you update your post to clear up this misunderstanding?

Thanks very much!

Cheers

Richard

Hi again Bish

Thanks very much for the correction. The conversations in Exeter were quite wide-ranging, so maybe the group ended up talking at cross-purposes at some point - I can send you the paper if you are interested (I think it was already made available to IPCC WG1 FOD reviewers), and when it's published we can post a link here.

Thanks again for updating the post!

All the best,

Richard

Richard B - thanks for clarifying. I may be getting mixed up here, but I thought that HadCRUT4 was going to show a warmer world thanks to adjusting the ocean temperatures up as a consequence of the ARGO data. I suppose the new Arctic stations are just for good measure?

Sorry, I can't take any of these land surface datasets seriously. Can you justify or explain the changes NOAA have made to the GHCNv3 dataset and GISS likewise to the historic records for Greenland, Iceland, Norway and Russia? Odd that Tiree, Belfast, Aberdeen~Dyce have not been adjusted but Lerwick has. Maybe that is because Lerwick is just above 64 degrees North, so Hansen can use it to extrapolate and exaggerate his Arctic temperatures.

lapogus, the ocean temperature (SST) part of HadCRUT4 (which is called, confusingly, HadSST3) does not use ARGO data. It uses data from ICOADS, which is a combination of measurements from ships and from buoys.

You may not be surprised to learn that

"new adjustments have been developed to address recently identified biases in SST".

Hi lapogus

I don't really know about what NOAA and GISS do, I'm afraid!

BTW there is an interesting and relevant post by Ed Hawkins at Reading University on his blog climate-lab-book.ac.uk

Cheers

Richard

And, the updated land temperature dataset (CRUTEM4) paper is now published:

http://www.agu.org/pubs/crossref/2012/2011JD017139.shtml

for those with access, to see what has been done.

Ed.

And... the CRUTEM4 data is available for free download:

http://www.cru.uea.ac.uk/cru/data/temperature/

cheers,

Ed.
