Bishop Hill

The daft and the non-daft climate model runs

Jan 22, 2016

Climate: Models

Nic Lewis has published another fascinating article about the Marvel et al. paper over at Climate Audit. I was particularly taken by the discussion of the GCM runs that lay behind Marvel et al.'s assessment of the effect of land-use changes.

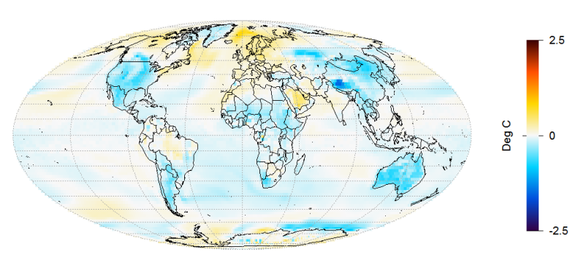

In essence, the authors ran the model five times, with land-use changes as the only forcing. This tends to produce a cooling, and four of the runs gave similar results, with their average looking like this:

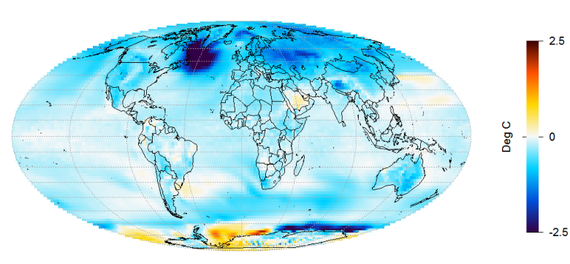

But one looked entirely different, like this:

I don't think they saw that coming! Apparently nobody has the faintest idea why this should have come to pass, but the authors do not think the run was "pathological". According to Nic's article, this kind of rogue run is not unprecedented either.

Does this then mean that what hits the public realm is actually "GCM runs that don't look daft"? Either way, it doesn't leave you with a warm feeling, does it?

Reader Comments (23)

Bit like the UK opinion polls last year then. The ones that turned out to have been accurate were binned because they didn't look right.

GCMs, with a lateral cell dimension of ~100 km, model at roughly 10^8 times the ~1 mm characteristic length of turbulent flow in air at 1 atm. After a few days they deviate from reality because of chaos. Assembling averages of many runs does not improve the outcome.

It'll take ~650 years before CPU power rises sufficiently. They must also stop using roughly double the real low-level cloud optical depth to produce an imaginary 'positive feedback', and correct the two bad radiative-physics mistakes made in 1976. Apart from that, this academic make-work scheme for scientific wannabes is fine so long as the public don't get to know the truth....:o)

Reminds me of what happened with the BBC sponsored Climate Prediction.net. They had all sorts of rubbish outputs, such as Snowball Earth, that they dismissed as unrealistic. However, runs that produced a 10-degree increase per doubling of CO2 were somehow OK. They then produced headlines about the extreme range they could predict and how we should be alarmed.

What really grates for me is that in public presentations climate models are presented as some kind of quite complicated but utterly deterministic Meccano gearbox: you turn the handle and, according to the gears selected, the same result comes out every time (validation not required). The ghastly Gavin is a particularly smug/sneery proponent of this.

The very idea that quite simple mechanical configurations, like, say, a triple pendulum, can exhibit chaotic behaviour is not something that many in the field of science communication are comfortable with, and I suspect that chaos is in fact profoundly disturbing to some and perceived as a threat by many others seeking to impose their dogma on the way to total control....

The distaste for validation among many modellers (you know the subject areas) and the near-total refusal to indicate how broad the range of model outcomes was before they actually picked one tell their own story, one that simply isn't addressed by most of the commentary and promotion...

As others have said, and it bears repeating endlessly, an unvalidated model is simply an illustration of a hypothesis: it only becomes skilful if it passes validation and repeatedly predicts nature with an acceptable level of accuracy and repeatability.
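The point about simple deterministic systems behaving chaotically can be illustrated with the Lorenz (1963) equations, a standard three-variable toy system (not a GCM, and nothing here explains the particular Marvel et al. run). This is a minimal sketch, assuming the textbook parameters sigma = 10, rho = 28, beta = 8/3, an arbitrarily chosen RK4 step of 0.01 and a perturbation of 1e-8; the function names are hypothetical, for illustration only. Two runs of the same code, started almost identically, track each other for a while and then diverge.

```python
"""Sensitive dependence on initial conditions in the Lorenz (1963) system."""

import math


def lorenz_rhs(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Time derivatives (dx/dt, dy/dt, dz/dt) of the Lorenz equations."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance one step with the classical fourth-order Runge-Kutta scheme."""
    k1 = lorenz_rhs(state)
    k2 = lorenz_rhs(tuple(s + 0.5 * dt * k for s, k in zip(state, k1)))
    k3 = lorenz_rhs(tuple(s + 0.5 * dt * k for s, k in zip(state, k2)))
    k4 = lorenz_rhs(tuple(s + dt * k for s, k in zip(state, k3)))
    return tuple(
        s + dt / 6.0 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def integrate(state, dt=0.01, steps=4000):
    """Return the full trajectory, including the initial state."""
    trajectory = [state]
    for _ in range(steps):
        state = rk4_step(state, dt)
        trajectory.append(state)
    return trajectory


def separation(a, b):
    """Euclidean distance between two states."""
    return math.dist(a, b)


if __name__ == "__main__":
    run_a = integrate((1.0, 1.0, 1.0))
    run_b = integrate((1.0 + 1e-8, 1.0, 1.0))  # nudge x by one part in 10^8
    for step in range(0, 4001, 500):
        gap = separation(run_a[step], run_b[step])
        print(f"t = {step * 0.01:5.1f}  separation = {gap:.3e}")
```

Each run is exactly reproducible (same inputs, same outputs), yet the gap between the two grows roughly exponentially until it is as large as the attractor itself, so determinism and long-range unpredictability are not in conflict.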

Virtual-reality climate models sell better. Blue colours on maps aren't very warming, are they?

And that's just the anomaly. The absolute temperatures look daft for all five runs with GISS, the least spatially accurate model of them all.

Note the North Atlantic cold spot in the "rogue" Marvel et al run.

Now look at the North Atlantic cold spot in the NOAA 2015 data

That looks too similar to be coincidence.

Entropic man,

Looks can be deceiving. If you count the one part that matches up you also have to count all the other parts that don't.

It's the old XKCD Jelly Beans problem.

Besides, the NOAA 2015 data has been corrupted by so much observer bias that it's worthless anyway.

There's a reason that respectable scientists use double-blind trials.
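The jelly-bean point is the multiple-comparisons problem: in the xkcd strip, 20 jelly-bean colours are each tested at a 5% significance level and one comes up "significant" by chance. A minimal Python sketch of the arithmetic, assuming the tests are independent (an idealisation; neighbouring map regions are not independent); the function name is hypothetical.

```python
def prob_at_least_one_false_positive(alpha: float, n_tests: int) -> float:
    """Chance that at least one of n independent tests of a true null
    hypothesis comes out 'significant' at level alpha."""
    return 1.0 - (1.0 - alpha) ** n_tests


if __name__ == "__main__":
    for n in (1, 5, 20, 100):
        p = prob_at_least_one_false_positive(0.05, n)
        print(f"{n:3d} comparisons -> P(at least one spurious match) = {p:.3f}")
```

With 20 comparisons the chance of at least one spurious "match" is about 64%, which is why a single resemblance has to be weighed against all the places that were free to match and didn't.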

@ Sean OConnor

"Bit like the UK opinion polls last year then. The ones that turned out to have been accurate were binned because they didn't look right.

Jan 22, 2016 at 10:43 AM | Sean OConnor"

And of course a certain type of voter apparently "didn't answer the phone" - which is akin to satellite data not being "heard".

I think that second graph shows what happened to the Vikings in Greenland. They should have used a different model...

That's the magic of modeling.

You get to rapidly produce results at the click of a button while the experimentalists are still putting their lab coats on. Which means you can also sweep a lot more 'rogue' results under the carpet and pretend they never happened.

Jan 22, 2016 at 5:43 PM | michael hart

"....get to rapidly produce results at the click of a button..."

Like heck! These models pound away for aeons, using vast amounts of processing power (and vast amounts of electricity, but all from approved sources, natch). Then they produce utter twaddle and Slingo (or whoever) demands a new supercomputer, courtesy of the taxpayer. Odd: the models are always the Delphic Oracle, until the Met Office, GISS, NOAA, or whoever suddenly wants an even more expensive toy to play with.

"Now look at the North Atlantic cold spot in the NOAA 2015 data

That looks too similar to be coincidence."

Actually they are not very similar at all. The NOAA "cold spot" is in the Gulf Stream SW of Iceland. The GCM cooling is in the Irminger Current and East Greenland Current.

How is a model run different from a monkey tapping on a keyboard? We can get a climate prediction, and we can get a Shakespeare. Odds are comparable.

Oddly, "pathological" was the first word that popped into my mind, unbidden.

As Daft DIY Graphs constitute most of the roughage in the diet of denial, the unrecognizable outliers generated when hundreds of model runs are done should hardly come as a surprise, unless you've never used Monte Carlo methods to calibrate physical models.

"Unrecognisable outliers"

Haha

Russel, the idea of using Monte Carlo for climate prediction makes less sense than using it to predict next week's lottery numbers.

Ernest Rutherford once said to a young scientist, after he had presented a statistical analysis of an experiment, "If you had chosen a better experiment, you wouldn't have had to use statistics."

Climate modellers must accept that the models depend on a basic mistake by R. D. Cess (1976), when he claimed the ratio of OLR to Earth's surface exitance was the planet's emissivity. Total fail; any professional will confirm OLR is a highly distorted, almost black-body IR emitter. The ~3.3 W/(m^2·K) 'Planck response' is a fabrication, and there was 50% more energy than in reality. To pretend there is an enhanced GHE, the Alchemists have spent 40 years inventing clever deceits.

Comparing the averaged map with the rogue map, the least difference is at the Equator. Nearer the Poles, lows become lower and highs become higher.

This looks like a data-entry error, or an arithmetic error. Were it my experiment, I would not publish until the error was explainable and reproducible.

clivere: "BBC sponsored Climate Prediction.net"

Yes, that was the great Myles Allen, of "Towards the Trillionth Tonne" and promoter of "keep it in the ground". He is also on the Executive Board of Environmental Research Letters, http://iopscience.iop.org/1748-9326/page/Editorial%20Board, along with Peter Gleick and Stefan Rahmstorf.

Anyone from the BBC flying to New York or Washington any day this week: good luck.

They say it's the worst winter storm to hit America EVAR.

So seasonal snow is called a storm now.

Actually, that climateprediction.net experiment was the only one I am aware of where any kind of sensitivity test of the input variables was conducted. I know GISS have never done it because I asked Gavin directly and he replied that doing so 'wouldn't be useful'. What this test should have told them is that the huge error margins of the inputs utterly invalidate any notion that the models can ever have predictive value, and hence they cannot be useful for policy or indeed for much useful experimentation. Nature proved that in the end, but it was very obvious to those of us who program such numerical models and deal with them all day long.

Russel (judging from his utterances on the subject) has undoubtedly never been near any such physical model, and the Monte Carlo method is something he has likely only ever read about. Such methods, though, are the only plausible way of combining models; not pre-selecting half a dozen model runs that are broadly similar and then declaring this batch 'robust', which is merely a pseudo-Bayesian approach based on hopelessly biased priors combined with a historically ignorant philosophy that the temperature of the Earth cannot change much without CO2 or aerosols (its magic fossil-fuel counterpart that appears whenever needed to explain away any cooling period that just shouldn't happen by their simplistic two-variable, linear thinking).