Bishop Hill

A report from the Royal

Oct 2, 2013

Climate: IPCC

Climate: WG2

Royal Society

The Royal Society is holding a two-day meeting to discuss the Working Group I report of the IPCC. Reader Katabasis was there and sent this report.

So the first day of the meeting at the Royal Society to discuss the IPCC AR5 report was quite an eye-opener.

The tone was set from the start with the first two speakers wringing their hands over the issue of "communicating the message", pointing out that sceptics were apparently "very good" at it. According to Mark Walport, chief scientific adviser to the Government, we sceptics (sorry, those who "deny the science") are "single issue, great communicators." Thomas Stocker followed in the second talk emphasising the "Key 19 messages" in the SPM report.

Bob Ward of the Grantham Institute, in the audience, stood and made some handwaving point that included "knowing that there were sceptics here who managed to get a ticket". He cast his arm around to take in the whole audience as he said this and I wondered if he thought we were like hidden bodysnatchers amongst the innocent attendees that everyone needed to be wary of.

I made lots of notes during the day; however, in the interests of brevity I'll just mention a few highlights and then go into more detail regarding the final talk that really demonstrated the shocking discrepancy between the IPCC's actual position and the media's hysterical portrayal of it.

The day was, as expected, extremely model heavy. When actual observations were superimposed upon model projections they were often embarrassingly small relative to the "data" supposedly being presented. Model outputs were continually referred to as "data", including outputs that refer to something in the future.

Several speakers referred to claims that global warming has stopped. They said this claim was untrue and that warming was in fact continuing; it had just slowed down. This is despite the fact that section B.1 of the SPM says: "the rate of warming over the past 15 years (1998–2012; 0.05 [–0.05 to +0.15] °C per decade)"
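The arithmetic behind that quoted figure can be sketched in a few lines of Python. This is only an illustration of what the bracketed range implies over the 15-year window; the names are mine, not the IPCC's, and treating 1998–2012 as exactly 1.5 decades with a constant rate is a simplifying assumption.

```python
# Trend and uncertainty range as quoted from SPM section B.1 (deg C per decade).
CENTRAL, LOW, HIGH = 0.05, -0.05, 0.15

# Assumption: 1998-2012 inclusive is treated as 15 years, i.e. 1.5 decades.
DECADES = 1.5


def total_change(rate_per_decade, decades=DECADES):
    """Temperature change implied by a constant rate over the window."""
    return rate_per_decade * decades


def range_includes_zero(low=LOW, high=HIGH):
    """True when a zero trend lies inside the quoted range."""
    return low <= 0.0 <= high


if __name__ == "__main__":
    for label, rate in (("low", LOW), ("central", CENTRAL), ("high", HIGH)):
        print(f"{label:>7}: {total_change(rate):+.3f} deg C over the period")
    print("zero trend inside quoted range:", range_includes_zero())
```

On these numbers the quoted range covers both a small warming and no warming at all, so "slowed" and "stopped" can both be read into the same sentence.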

There was a palpable air of many having been rattled by "the hiatus". Excuses ranged from aerosols to soot to natural variability. One of the latter invocations caused an intervention from the Met Office's Julia Slingo - she pointed out that the PDO could lead to no further warming for up to 30 years, "so we're not out of the woods yet". I thought that was a particularly curious turn of phrase given how so many dedicated alarmists tell me they would be overjoyed if no global warming came to pass.

Throughout the day though, having read through the SPM a third time, and taken in more layers of official IPCC positions, I became increasingly conscious of the greatly diminished role of feedbacks. I checked through the SPM one more time, looking at each reference to "feedback". No, I wasn't imagining it: it is very muted. Suddenly the focus of almost every presentation on predictions for the year 2100 made more sense. We'd previously been promised fire and brimstone long before that. The IPCC, it seems, was actually climbing down from this position, though I didn't quite grasp how profoundly until the final talk by Matt Collins on 'What is the chance of abrupt or irreversible changes?':

What "Abrupt" and "Irreversible" mean in IPCC speak

AR5 has apparently introduced very specific definitions for the above.

Inigo Montoya, in the film 'The Princess Bride', says to one of the main antagonists, “You Keep Using That Word, I Do Not Think It Means What You Think It Means”, after the latter says "Inconceivable!" for the nth time as his plans are foiled. 'Abrupt' and 'Irreversible' will obviously be reported by the media in quite lurid terms. They won't match the reality of what the IPCC means by them unless the journalist has done their homework. Both definitions can be found in the section 'TFE.5: Irreversibility and Abrupt Change' in the draft report and are worth repeating in full here:

"Abrupt climate change is defined in AR5 as a large-scale change in the climate system that takes place over a few decades or less, persists (or is anticipated to persist) for at least a few decades, and causes substantial disruptions in human and natural systems."

Is that quite what you thought it would mean? No, me neither. Wait, there's more:

"A change is said to be irreversible if the recovery timescale from this state due to natural processes is significantly longer than the time it takes for the system to reach this perturbed state."

Abrupt changes that aren't really abrupt and irreversible changes that aren't really - er - irreversible.

Catastrophe? Er - we don't know.

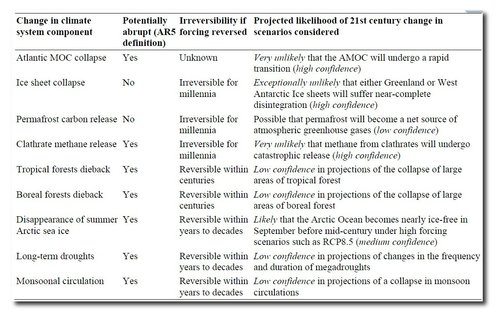

Matt Collins began his talk disarmingly by saying that it could well have been very short - he could have just got up on stage and said "we don't know". I'm glad he didn't because otherwise I would not have been made aware of Table 12.4 in the draft report. It lists all of the catastrophic scenarios that many of us have become used to hearing wailed over by the media and associated directly with the notion of "strong positive feedbacks". Collins showed us the table and went through the details of two of the entries as examples of just how uncertain this area was.

What? Yes, that's right. The real story may not be in the IPCC rowback on temperature ranges, or its cack-handed "explanations" for the stalling temperatures. It may in fact all be in this table. Be sure to look for yourself. Every single catastrophic scenario bar one has a rating of "Very unlikely" or "Exceptionally unlikely" and/or has "low confidence". The only disaster scenario that the IPCC consider at all likely in the possible lifetimes of many of us alive now is "Disappearance of Arctic summer sea ice", which itself has a 'likely' rating and is liable to occur by mid-century with medium confidence. As the litany of climate disasters goes, that's it.

This prompted me to put a question to him, which was the first I'd been able to raise via the chair all day (I'd tried in several talks). I said to Matt:

"What the IPCC says, and what the media says it says are poles apart. Your talk is a perfect example of this. Low likelihood and low confidence for almost every nightmare scenario. Yet this isn't reflected at all in the media. Many people here have expressed concern at the influence of climate sceptics. Wouldn't climate scientists' time be better spent reining in those in the media producing irresponsible, hysterical, screaming headlines?"

Tumbleweed followed for several seconds. Then Matt said:

"Not my responsibility".

Reader Comments (128)

Tamsin, thank you!

For those interested in clouds Willis Eschenbach has a post over at WUWT where he sums up as follows

the link is http://wattsupwiththat.com/2013/10/03/the-cloud-radiative-effect-cre/#more-95082

Sceptic note: this is citizen science and to be subjected to the same scrutiny as mainstream science.

Cumbrian Lad

I recall a forecast 2 years ago that a 21st century Maunder Minimum event would:-

"In our study we find that a new Maunder Minimum would lead to a cooling of 0.3°C in the year 2100 at most – relative to an expected anthropogenic warming of around 4°C. (The amount of warming in the 21st century depends on assumptions about future emissions, of course)."

....... According to these results, a 21st-century Maunder Minimum would only slightly diminish future warming."

" What if the Sun went into a new Grand Minimum "

So if a Maunder Minimum event, lasting decades can "only slightly diminish future warming" just what magnitude of effect can be attributed to "the weakness of the minimum a few years ago.."

If it has had a significant effect in contributing to the "hiatus"? Then what could we expect from a full blown 21st Century Maunder type event ?

I don't know the answer and I suspect that nobody else does! But the two claims appear counterintuitive.

I should also make it clear that I do not know whether or not there will be a Maunder or any other type of extended minimum in the near future.

As always only time will tell, but if a minimum does occur I suspect that Georg Feulner's closing comment from the RC post:-

..may be quite wide of the mark, as I am sure it will be very much the center of interest for an awful lot of non-solar physicists:-)

Tamsin, even the new version of the disputed graph shows all the models running north of the observations. Never mind the fallacy of averaging different models, where I agree with RGB at Duke, but if all the models are appearing (even over a limited period) to run hot why not chuck out the worst ones? I reckon we out here do not see all the runs. Are there examples where they run cold and wise heads in the modelling community have censored them? Are there hot outliers which are similarly dismissed? Are there part-run criteria for premature termination?

Anyhow, why not chuck out the worst-performing models? Are you expecting the observations to somehow catch up with the highest-running spaghetti strands? Over what timescale do you want to work?

In climate science internal politics, is it possible to chuck out a model for poor performance?

Do you (the modelling community, that is) ever give any time to considering that they all run hot because there is a common wrong premise in the treatment of the physics? Because the other explanation is that the world is wrong.

Can someone please tell me the average surface air temperature of the mid Atlantic ocean in the year 1267 ?

I need it for my new model. After all 70% of the earth's surface is water.

Thank you.

Re: Oct 4, 2013 at 10:36 AM | Tamsin Edwards

"Before I start it's probably worth linking to the IPCC report (all chapters, including Technical Summary):

http://www.climatechange2013.org/report/review-drafts "

Hi Tamsin, Your link doesn't seem to work...

Withdrawn already ???.................

WUWT has it -

http://wattsupwiththat.com/2013/09/30/ipcc-ar5-full-final-report-released-full-access-here/#more-94902

Re: Oct 4, 2013 at 2:13 PM | Marion

>>http://www.climatechange2013.org/report/review-drafts

>>Hi Tamsin, Your link doesn't seem to work...

I think it needs a final '/', i.e.

http://www.climatechange2013.org/report/review-drafts/

And for those interested in the science - the NIPCC version -

http://climatechangereconsidered.org/

Re: Oct 4, 2013 at 2:31 PM | Tim Osborn

Thanks ;-)

Green Sand - be careful of the terminology; the Maunder minimum was a period when the solar maximum was lower than usual. We are currently at the top of the 11 year cycle (solar max) which is also a reduced maximum compared to usual. (Hence the wondering if we'll see another event such as the Maunder minimum, i.e. a series of low maxima).

Tamsin spoke of the "minimum a few years ago" and so seems to be referring to the baseline level, hence my earlier comment. She may of course be talking about the low max, but that's not what she said. As you point out no one knows if the next cycle will be a weak one or not, but even if it's strong, it's not due to hit max for 11 years, and would be precious little help in alleviating the current 'hiatus'.

Tamsin: "are you saying you wouldn't class the PDO as a mode of internal variability, or that modes of internal variability can generate energy?"

Is this an argument that the net state of the PDO must return to a baseline? If so, then I would very much appreciate a reference to something that expresses this in writing so that I can cite it.

Talking of RGB at Duke - he's posted another excellent comment at Climate Audit, full of good old fashioned 'common sense' so utterly lacking it would seem in many of our climate science community, really worth reproducing here in full -

(in fact the whole article and all the comments are well worth reading!!)

Re Figure 1.4: Estimated changes in the observed globally and annually averaged surface temperature anomaly relative to 1961–1990 (in C) since 1950 compared with the range of projections from the previous IPCC assessment

"RGBATDUKE

Posted Oct 3, 2013 at 3:30 PM | Permalink | Reply

"... — if I were going to do an actual post on it, I’d only feel comfortable doing so if I had the actual data that went into 1.4, so I could extract the strands of spaghetti, one at a time. As it is, I can only see what a very few strands of colored noodles do, as they are literally interwoven to make it impossible to track specific models. For example, at the very top of the figure there is one line that actually spends all of its time at or ABOVE the upper limit of even the shaded line from the earlier leaked AR5 draft. It is currently a spectacular 0.7 to 0.8 C above the actual GAST anomaly. Why is this model still being taken seriously? As not only an outlier, but an egregiously incorrect outlier, it has no purpose but to create alarm as the upper boundary of model predicted warming, one that somebody unversed in hypothesis testing might be inclined to take seriously.

But then it is very difficult to untangle the lower threads. A blue line has an inexplicable peak in the mid-2000′s 0.6 C warmer than the observed temperatures, with all of the warming rocketing up in only a couple of years from something that appears much cooler. Not even the 1997-1998 ENSO or Pinatubo produce a variation like this anywhere in the visible climate record. This sort of sudden, extreme fluctuation appears common in many of the models — excursions 2 or three times the size of year to year fluctuations in the actual climate, even during times when the climate did, in fact, rapidly warm over a 1-2 year period.

This is one of the things that is quite striking even within the spaghetti. Look carefully and you can make out whole sawtooth bands of climate results where most of the GCMs in the ensemble are rocketing up around 0.4 to 0.5 C in 2-3 years, then dropping equally suddenly, then rocketing up again. This has to be compared to the actual annual variation in the real world climate, where a year to year variation of 0.1 or less is typical, 0.2 in a year is extreme, and where there are almost no instances of 3-4 year sequential increases.

I have to say that I think that the reason that they present EITHER spaghetti OR simple shaded regions against the measurements isn’t just to trick the public and ignorant “policy makers” into thinking that the GCMs embrace the real world data, it is to hide lots of problems, problems even with the humble GAST anomaly, problems so extreme that if they ever presented the GCM results one at a time against the real world data would cause even the most ardent climate zealot to think twice. Even in the greyshaded, unheralded past (before 1990) the climates have an excursion and autocorrelation that is completely wrong, an easy factor of two too large, and this is in the fit region.

Autocorrelation matters! In fact, it is the ONLY way we can look at external macroscopic quantities like the GAST anomaly and try to assess whether or not the internal dynamics of the model is working. It is the decay rate of fluctuations produced by either internal feedbacks or “sudden” change in external forcings. In the crudest of terms, many of the models above exhibit:

* Too much positive feedback (they shoot up too fast).

* Too much negative feedback (they fall down too fast).

* Too much sensitivity to perturbations (presuming that they aren’t whacking the system with ENSO-scale perturbations every other year, small perturbations within the model are growing even faster and with greater impact than the 1997-1998 ENSO, which involved a huge bolus of heat rising up in the pacific).

* Too much gain (they go up more on the upswings than they go down on the downswings, which means that the effects of overlarge positive and negative oscillations bias the trend in the positive direction).

That’s all I can make out in the mass of strands, but I’m sure that more problems would emerge if one examined individual models without the distraction of the others.

Precisely the same points, by the way, could be made of and were apparent in the spaghetti produced by individual GCMS for lower troposphere temperatures as presented by Roy Spencer before congress a few months ago. There the problem was dismissed by warming enthusiasts as being irrelevant, because it only looked at a single aspect of the climate and they could claim “but the GASTA predictions, they’re OK”. But the GASTA predictions above are the big deal, the big kahuna, global warming incarnate. And they’re not OK, they are just as bad as the LTT and everybody knows it.

That’s the sad part. As Steve pointed out, they acknowledged the problem in the leaked release, we spent another year or two STILL without warming, with the disparity WIDENING, and their only response is to pull the acknowledgement, sound the alarm, and obfuscate the manifold failures of the GCMs by presenting them in an illegible graphic that preserve a pure statistical illusion of marginal adequacy.

Most of the individual GCMs, however, are clearly NOT adequate. They are well over 0.5 C too warm. They have the wrong range of fluctuation. They have absurd time constants for growth from perturbations. They have absurd time constants for decay from perturbations. They aren’t even approximately independent — one can see bands of similar fluctuations, slightly offset in time, for supposedly distinct models (all of them too warm, all of them too extreme and too fast).

Any trained eye can see these problems. The real world data has a completely different CHARACTER, and if anything, the problems WORSEN in the future. I cannot imagine that the entire climate community is not perfectly well aware of the “travesty” referred to in Climategate, that the models are failing and nobody knows why.

Why is honesty so difficult in this field? As Steve Mosher pointed out, none of this should ever have been used to push energy policy or CAGW fears on an unsuspecting world. It is not (as he seems to finally be admitting) NOT “settled science”. It’s not surprising that models that try to microscopically solve the world’s most difficult computational physics problem get the wrong answer across the board — rather, it’s perfectly reasonable, to be expected. If it weren’t for the world-saving heroic angst, the politics, and the bags full of money, building, tuning, fixing, comparing the models would be what science is all about, as Steve also notes.

So why not ADMIT this to a world that has been fooled into thinking that the model results were actually authoritative, bombarded by phrases like “very likely” that have no possible defensible basis in statistical analysis?

All they are doing in AR5 figure 1.4 is delaying the day of reckoning, and that not by much. If its information content is unravelled, strand by strand, and presented to the world for objective consideration, all it will succeed in doing is proving beyond any doubt that they are, indeed, trying to cover up their very real uncertainty and perpetuate for a little while longer the illusion that GCMs are meaningful predictors and a sound basis for diverting hundreds of billions of dollars and costing millions of lives per year, mostly in developing countries where increased costs of energy are directly paid for in lives, paid right now, not in a hypothetical 50 years. I think they are delaying on the basis of a prayer. They are praying for another super-ENSO, a CME, a huge spike in temperature like the ones their models all produce all the time, one sufficient to warm the world 0.5C in a year or two and get us back on the track they predict.

However, we are at solar maximum in solar cycle 24 at a 100 year low, and the coming minimum promises to be long and slow, with predictions of an even lower solar cycle 25. We are well into the PDO at a point in its phase where in the recent past the temperature has held steady or dropped. Stratospheric water vapor content has dropped and nobody quite knows why, but it significantly lowers the greenhouse forcing in the water channel (I’ve read NASA estimates for the lowering of sensitivity as high as 0.5C all by itself). Volcanic forcings appear to have been heavily overestimated in climate models (and again, the forcings have the wrong time constants). It seems quite likely that “the pause” could continue, well, “indefinitely” or at least until the PDO changes phase again or the sun’s activity goes back up. Worse yet, it might even cool because GCMs do not do particularly well at predicting secular trends or natural variability and we DO NOT KNOW what the temperature outside “should” be (in the absence of increased CO_2) in any way BUT from failed climate models.

So sad.

So expensive.

rgb "

Comment from -

http://climateaudit.org/2013/09/30/ipcc-disappears-the-discrepancy/
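The two diagnostics the quoted comment leans on, year-to-year change and autocorrelation, are simple to compute once the individual series are available. The following is a minimal sketch only: the function names are hypothetical, the toy series is made up for illustration and is not temperature data, and the estimator shown is one common textbook form rather than anything the commenter specified.

```python
def year_to_year_changes(series):
    """Differences between consecutive annual values."""
    return [later - earlier for earlier, later in zip(series, series[1:])]


def autocorrelation(series, lag):
    """Sample autocorrelation at the given lag (lag 0 is always 1.0)."""
    n = len(series)
    if not 0 <= lag < n:
        raise ValueError("lag must be between 0 and len(series) - 1")
    mean = sum(series) / n
    variance_sum = sum((v - mean) ** 2 for v in series)
    if variance_sum == 0:
        raise ValueError("series is constant; autocorrelation is undefined")
    covariance_sum = sum(
        (series[i] - mean) * (series[i + lag] - mean) for i in range(n - lag)
    )
    return covariance_sum / variance_sum


if __name__ == "__main__":
    # Made-up numbers purely to exercise the functions; not observations.
    toy = [0.0, 0.1, 0.3, 0.2, 0.4, 0.5, 0.4, 0.6]
    biggest_step = max(abs(c) for c in year_to_year_changes(toy))
    print("largest year-to-year step:", round(biggest_step, 3))
    for lag in (1, 2, 3):
        print(f"lag {lag} autocorrelation:", round(autocorrelation(toy, lag), 3))
```

Running the same two functions on an observed series and on each model run separately would be one way to make the "strand by strand" comparison the comment asks for.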

Thanks, Marion! I am a big fan of RGB's comments, he has a talent for explaining complex matters in ways that make sense to me (of course I know one has to be on guard for comments that merely please one's predilections etc. but he really seems to understand what's going on). Here is a clickable link to his last comment:

new comment on Climate Audit from RGB at Duke

Oct 4, 2013 at 3:17 PM | Registered Commenter MikeHaseler

Below is a quotation from an article by a PhD student that Judith Curry published at her site. I refer you to this article because it sets forth so clearly how ENSO and similar matters are handled in models. To use the terminology of the article, ENSO is like a dog on a leash. The leash represents the requirement that ENSO can vary, run warm or cool, only within the constraints of the leash because greater variance would violate energy balance. In my mind, that supports my point that temperature evidence is made to conform to higher level postulates about energy balance and the empirical element is suppressed. Of course leashes can vary in length so the empirical element is not totally suppressed.

"Global temperature can also change due to “internally generated variability”; also known as “unforced variability”. This type of variability is due to the ocean-atmosphere system essentially changing itself through its own chaotic motion. The most well-known mode of unforced variability is called the El-Niño Southern Oscillation (often referred to as the El-Niño, La-Niña cycle). During La-Niña years, heat is buried in the deep Pacific Ocean more readily and thus the global mean temperature at the earth’s surface is a bit lower than it would be otherwise. During El-Niño years, this buried heat is released back to the surface and the global mean temperature tends to be a little warmer than it would be otherwise. In this situation, global mean temperature at the earth’s surface is changing because energy is being moved around the climate system (into and out of the deep ocean) not because there is a net change in the total amount of energy in the system."

http://judithcurry.com/2013/07/13/unforced-variability-and-the-global-warming-slow-down/

For a more technical discussion and published papers, check out Judith Curry’s post which contains this response from author/scientist Xie in which he explains why Curry is treating the PDO as too important:

“I have a different take on this. The IPCC conclusion applies to centennial warming from 1880. Much of the 0.8 C warming since 1900 is indeed due to anthropogenic forcing, because natural variability like PDO and AMO has been averaged out over this long period of time.

Our results concern the effect of tropical Pacific SST on global mean temperature over the past 15 years. It is large enough to offset the anthropogenic warming for this period, but the effect weakens as the period for trend calculation gets longer simply because it is oscillatory and being averaged out.”

http://judithcurry.com/2013/08/28/pause-tied-to-equatorial-pacific-surface-cooling/

Cumbrian Lad

"Green Sand - be careful of the terminology"

No worries Cumbrian, I am quite aware Tamsin was referring to the minimum between cycle 23 and 24 which was both lower and longer than many cycles previously. But if one single lower and longer minimum can have a significant effect which appears to be the point Tamsin is making then what are we to expect from a new Grand Minimum? RC are forecasting "only slightly diminish future warming"

It was the apparent change of thought over a short 2 year period that gained my attention.

Oct 4, 2013 at 11:16 AM | Unregistered Commenter Tamsin Edwards

"To clarify - by "This assumption comes from the very high level principle that phenomena such as the PDO cannot generate energy" - are you saying you wouldn't class the PDO as a mode of internal variability, or that modes of internal variability can generate energy?

and why do you say: "However, in making the assumption, modelers rule out all empirical investigation of the phases of the PDO"?"

To clarify, I am saying that climate modelers constrain the various modes of internal variability by applying the high level principle that individual modes cannot generate energy. When modelers attempt to model something that is somewhat cyclic such as the PDO they require that observed temperature variations must sum to near zero over the long run. My objection to this practice is that it has become automatic for modelers and inclines them to overlook observed temperatures.

As regards the PDO specifically, I doubt that a close look at the data could justify the claim that the PDO is cyclic. Rather it is somewhat cyclic. To resolve this "somewhat cyclic nature" what is needed is closer empirical investigation of the PDO that would reveal truly cyclic sub-processes that make it up. Once these are identified then you can apply your conservation of energy principle to them.

Modelers should carefully distinguish between "internal variability" which is internal to the model and "natural variability" which is in nature and must be revealed through empirical investigation. Modelers quite automatically treat the PDO as an example of "internal variability" and then tend to think they have done justice to its "natural variability."

These questions came up recently at Judith Curry's blog in a discussion of "'Recent global warming hiatus tied to equatorial Pacific surface cooling' by Yu Kosaka and Shang-Ping Xie." Professor Xie offered the following comment to Dr. Curry:

“I have a different take on this. The IPCC conclusion applies to centennial warming from 1880. Much of the 0.8 C warming since 1900 is indeed due to anthropogenic forcing, because natural variability like PDO and AMO has been averaged out over this long period of time.

Our results concern the effect of tropical Pacific SST on global mean temperature over the past 15 years. It is large enough to offset the anthropogenic warming for this period, but the effect weakens as the period for trend calculation gets longer simply because it is oscillatory and being averaged out.”

It seems quite apparent that Dr. Xie is not averaging over "natural variability" but averaging over "internal variability." In doing so, he is simply telling us how his model operates but telling us nothing about the PDO.

I do not expect a modeler to know all of modeling or to be interested in all of modeling. I pose these questions to you because they might "ring a bell" for you. If not then I am not disappointed. Thank you for your always interesting contributions to this blog.

To think a cyclical or oscillating phenomenon averages out to zero may be intuitively correct. It isn't necessarily so though.
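The averaging argument in the quoted comment from Professor Xie, and the reservation about it just above, can both be explored with a toy calculation. This is a sketch under loud assumptions: a perfectly periodic sine wave with a hypothetical 60-year period and 0.1 °C amplitude stands in for the oscillation, and a hand-rolled least-squares slope stands in for "the trend". None of these values comes from the PDO record or from any model.

```python
import math

# Hypothetical values chosen for illustration only; not fitted to any record.
PERIOD_YEARS = 60
AMPLITUDE = 0.1  # deg C


def oscillation(n_years, period=PERIOD_YEARS, amplitude=AMPLITUDE):
    """Annual samples of an idealised, perfectly periodic oscillation."""
    return [amplitude * math.sin(2 * math.pi * t / period) for t in range(n_years)]


def mean(values):
    return sum(values) / len(values)


def ols_slope(values):
    """Ordinary least-squares slope of values against their index (per year)."""
    n = len(values)
    x_mean = (n - 1) / 2
    y_mean = mean(values)
    numerator = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(values))
    denominator = sum((i - x_mean) ** 2 for i in range(n))
    return numerator / denominator


if __name__ == "__main__":
    for window in (15, 30, 60, 120, 600):
        series = oscillation(window)
        print(
            f"{window:>4} yr  mean {mean(series):+.4f}  "
            f"trend {10 * ols_slope(series):+.5f} deg C per decade"
        )
```

In this idealised case the mean over whole cycles is zero and the fitted trend shrinks as the window spans more cycles, though it need not be exactly zero and its sign depends on where in the cycle the window starts. A series that is only "somewhat cyclic", or whose upswings and downswings differ in size, need not behave this way, which is the point at issue.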

Oh, and Tamsin doesn't need to answer my fumbling queries, but if anybody from the other side would like to reply to RGB I'd like to hear that. I like the way RGB writes.

Re: Oct 4, 2013 at 3:44 PM | Skiphil

"I am a big fan of RGB's comments, he has a talent for explaining complex matters in ways that make sense to me"

Re: Oct 4, 2013 at 7:55 PM | rhoda

"if anybody from the other side would like to reply to RGB I'd like to hear that. I like the way RGB writes "

Me too, guys (and thanks for the link, Skiphil). RGB has a wonderfully lucid way of writing in stark contrast to the IPCC reports and their penchant for changing definitions.

For example their changed definition of 'Climate Change' to mean 'anthropogenic climate change'. And now the revised meanings of 'abrupt' and 'irreversible' that Katabasis has so ably highlighted in his excellent report above.

No doubt there will soon be questionnaires going out to the public as to whether the government should take steps to avoid 'abrupt' and 'irreversible' climate change and of course the IPCC redefinitions will be nowhere in sight!!!

Utterly dishonest and meant to deceive it would appear to me! Words simply cannot express the contempt I feel for those willing to go along with this charade!

Further to my post upthread about Willis Eschenbach it appears my final caveat was prescient. Willis has updated the post as follows

@ Marion

By their weasel words Ye shall know them

(and their weasel deeds)

Thanks for the rgbatduke comments link at Climate Audit, Marion. I try to check CA regularly, but wasn't up to date.

Once again it is a case of: "Mr Nail, meet Mr Hammer, as wielded by Robert Brown at Duke." He answers several questions I had thought of asking, and poses others that I hadn't thought of.

Any student seeking a quality science training should seek him out. It may not make them rich or famous or successful. But if they wish to learn science, then he is a go-to person. I think he may be one of the very few, and I've met another one.

Tamsin,

Do you still plan to get back to us on the great "The deep ocean ate all the heat" mystery, currently baffling sceptics the world over? Please do :-)

Tamsin Oct 3 12:16

Tamsin, a model which, starting today, does not predict the future, is, I am sorry to say, totally worthless.

A model which has been fitted to the past and yet does not predict the future is also, I am sorry to say, totally worthless.

A model which has been found faithfully to reflect the past and which with the passage of time is found also faithfully to reflect the then future is a model in which we can have guarded confidence.

Consign anything else to the flames and start again.

Oct 5, 2013 at 7:44 PM | simon abingdon

Nota bene. Models do not "predict". They project what will happen when you enter values into them for the input parameters. If there are certain assumptions built into the models, as well, these will be reflected in the output. There should in reality be no assumptions - all variables should be able to be input as parameters, and then, if the output does not reflect real world observations, the models need either to be junked or improved. The fact is that the IPCC models are not fit for purpose. And that what has been done as a result of these models has cost us a fortune and helped to further impoverish the Third World.

Also, what should be clearly stated is what the models are known not to handle - for example, do they handle cosmic rays, how many of the huge number of varying cycles that affect climate are programmed into them, who QA'ed the models (null and void if done by those who wrote them), and so on. Indeed, here's a list from someone involved in model programming of how they need to be qualified. I'd like to see this run by Jones et al. at CRU.

http://blog.squandertwo.net/2006/06/modelling.html

Who programmed the computer model?

Did the same person do the programming as did the science?

If not, how was the science communicated from the scientist to the programmer? Are you confident that the programmer fully understood the science?

If more than one person programmed the model, do they all have the same background in and approach to programming?

If they have different backgrounds or approaches, what did you do to ensure that their contributions to this project would be compatible and consistent?

What proportion of total programming time was spent on debugging?

Was all the debugging done by the same person?

If not, was there a set of rules governing preferred debugging methods?

If so, are you sure everyone followed said rules to the letter?

Did any of the debugging involve putting in any hacks or workarounds?

If not, could you pull the other one, which has bells on?

Is there any part of the program which just works even though it looks like it probably shouldn't?

Are there any known bugs in the computer hardware or operating system that you run your model on?

If so, what have you done to ensure that none of those bugs affects your model?

What theories did you base the model on?

What proportion of these theories are controversial and what proportion are pretty much proven valid?

What information did you put into the model?

Where did this information come from?

How accurate is the information?

Have you at any point had to use similar types of information from significantly different sources? Have you, for instance, got some temperature data from thermometers and some other temperature data from tree rings?

If so, what have you done to ensure that these different data sources are compatible with each other?

If you've done something to make different data sources compatible, did it involve using any more theories to adjust data? If so, see the previous questions about theories.

Where you couldn't get real-world information, what assumptions did you use?

What is your justification for those assumptions?

Do any other scientists in your field tend to use different assumptions?

Have any of your theories, information, or assumptions led to inaccurate predictions in the past?

If so, why are you still using them?

If they previously led to inaccurate predictions, do you know why?

If you think you know why they led to inaccurate predictions, what else have you done to test them before using them in this model?

How many predictions has your computer model led to that have been verified as accurate in the real world?

How accurate?

Has any other computer model used roughly the same theories, assumptions, and data as yours to give significantly different conclusions?

If so, do you know why the conclusions were different?

How much new information has your computer model given you?

Jeremy, I'm not talking about software fidelity issues. They've been screwing things up (often unnoticed) since programs were first written.

The climate scientists' excuse that models only project what might happen were we to increase x by p% and decrease y by q% is of absolutely no interest to us. We want to know about a model which month by month and year by year faithfully tracks predictively what is then seen to have happened in retrospect.

We don't want to know about experiments with parameter tweaking. We want a system of climate prediction that works, demonstrably and believably so. Throw the toys away.

Oct 6, 2013 at 3:51 PM | simon abingdon

====================================

All models involve parameter tweaking. That's the name of the game. I think, however, that in the end we both want the same thing: models which reflect reality. That we have not got, by a long chalk. And when I discuss climate science with believers, I always ask them which sciences put results from models above results from real world data. The answer is usually a series of huffy noises.

On an earlier point - it looks like Tamsin is not coming back to us to explain how all this missing heat made its way to the deep oceans unrecorded by the Argo floats.

Where is Al Gore when we really need him?

I'm sure he could explain it. All of it.

I'd love to know how much $$$ he made, and lost, from all of the self-serving hysteria.

And she's gone. Now we are on page 2 and our questions will I suppose remain unanswered. Ah well.