Bishop Hill

The only way is Essex

Bishop Hill  Feb 20, 2015

Climate: Models  Climate: Sceptics  GWPF

GWPF have posted a new climate talk, this time given by Christopher Essex. No time to look at it myself, but it's here for those who are interested.

Bishop Hill  Feb 16, 2015

Climate: Models  Climate: Surface  Climate: WG2  Royal Society

In the wake of the Royal Society's recent quick guide to climate change, the Australian Academy has produced their own newbies' guide which can be seen here.

It contains some interesting bits and bobs, for example this bit on extreme rainfall.

Heavy rainfall events have intensified over most land areas and will likely continue to do so, but changes are expected to vary by region.

Bishop Hill  Feb 8, 2015

Climate: Models

Marotzke and Forster have published a response to Nic Lewis's critique of their paper. It can be seen here, at Ed Hawkins' Climate Lab Book site. Here's the start.

No circularity

It has been alleged that in Marotzke & Forster (2015) we applied circular logic. This allegation is incorrect. The important point is to recognise that, physically, radiative forcing is the root cause of changes in the climate system, and our approach takes that into account. Because radiative forcing over the historical period cannot be directly diagnosed from the model simulations, it had to be reconstructed from the available top-of-atmosphere radiative imbalance in Forster et al. (2013) by applying a correction term that involves the change in surface temperature. This correction removed, rather than introduced, from the top-of-atmosphere imbalance the very contribution that would cause circularity. We stand by the main conclusions of our paper: Differences between simulations and observations are dominated by internal variability for 15-year trends and by spread in radiative forcing for 62-year trends.

Unfortunately, when they continue to the section called "Specifics" I can't actually see any mathematics that purports to show that their original regression model was not circular. My impression is of handwaving. Steve McIntyre, in the comments at CA, seems to have reached similar conclusions:

I’ve done a quick read of the post at Climate Lab Book. I don’t get how their article is supposed to rebut Nic’s article. They do not appear to contest Nic’s equation linking F and N – an equation that I did not notice in the original article. Their only defence seems to be that the N series needs to be “corrected” but they do not face up to the statistical consequences of having T series on both sides.

Based on my re-reading of the two articles, Nic’s equation (6) seems to me to be the only logical exit and Nic’s comments on the implications of (6) the only conclusions that have a chance of meaning anything. (But this is based on cursory reading only.)

I guess we will have to wait and see what Nic Lewis makes of it before reaching firm conclusions.
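To make the statistical worry concrete, here is a toy sketch in Python of what having T on both sides of a regression can do. It is not a reproduction of either paper's analysis. The sample size, the value of `alpha`, and the choice to draw the temperature change and the top-of-atmosphere imbalance N as independent random numbers are all assumptions made for illustration, and the form F = N + alpha * dT is only an assumed reading of the "correction term" described above. Forster's point that N itself contains a surface temperature response is deliberately left out.

```python
import random


def ols_slope(x, y):
    """Ordinary least-squares slope of y regressed on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx


def toy_shared_term(n_models=2000, alpha=1.2, seed=0):
    """Compare regressing dT on N with regressing dT on F = N + alpha * dT.

    All inputs are hypothetical: dT and N are independent random draws,
    so any slope found for F comes only from the shared dT term.
    """
    rng = random.Random(seed)
    dT = [rng.gauss(0.0, 1.0) for _ in range(n_models)]
    N = [rng.gauss(0.0, 1.0) for _ in range(n_models)]
    # Assumed form of the diagnosed forcing: it contains the temperature term.
    F = [n + alpha * t for n, t in zip(N, dT)]
    return ols_slope(N, dT), ols_slope(F, dT)


slope_on_N, slope_on_F = toy_shared_term()
print(f"slope of dT on N: {slope_on_N:.3f}")
print(f"slope of dT on F: {slope_on_F:.3f}")
```

With independent inputs the slope on N is close to zero, while the slope on the diagnosed F is clearly positive simply because F contains the temperature term. Whether anything like this carries over to the real regression is exactly what is in dispute.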

Bishop Hill

Piers Forster comments at Climate Lab Book.

Nic is right that deltaT does appear on both sides, we are not arguing about this we are arguing about the implications.

We see the method as a necessary correction to N, to estimate the forcing, F. This is what we are looking for in the model spread, not the role of N – it would be more circular to use N as this contains a large component of surface T response.

We know this method of diagnosing F work very well – e.g. see Fig 5 of Forster et al. 2013

http://onlinelibrary.wiley.com/store/10.1002/jgrd.50174/asset/image_n/jgrd50174-fig-0005.png?v=1&t=i5xs0usz&s=4575b4038f30bacc5c54f4474456faf952af926d

We only see it as a problem as it affects the partitioning of the spread between alpha and F. We find that this creates some ambiguity over the 62 year trends but not the 15 year trends.

Uncertainty in the partitioning is different than creating a circular argument. We simply don’t do this.

I'm not sure I'm any the wiser.

Bishop Hill  Feb 5, 2015

Climate: Models

A few days ago I noted a new paper by Marotzke and Forster which claimed to show that the recent divergence of model predictions and observations was all down to natural variability. The paper was getting considerable hype from Marotzke's employers, the Max Planck Institute:

Sceptics who still doubt anthropogenic climate change have now been stripped of one of their last-ditch arguments...the gap between the calculated and measured warming is not due to systematic errors of the models, as the sceptics had suspected, but because there are always random fluctuations in the Earth's climate.

Marotzke was also quoted as saying: "The claim that climate models systematically overestimate global warming caused by rising greenhouse gas concentrations is wrong" and he went on to get quite a lot of media coverage, including the Mail, the Sydney Morning Herald, Deutsche Welle, and the Washington Post.

Based on media coverage of the paper's contents, I expressed considerable concern over what the authors had apparently done. It seems, however, that my criticisms at the time were understated. It is in fact "worse than we thought".

Bishop Hill  Feb 2, 2015

Climate: Models

Mutual confirmation of models (simple or complex) is often referred to as ‘scientific robustness’.

Alexander Bakker describes some of the axioms of climate modelling.

Bishop Hill  Jan 30, 2015

Climate: Models

I had an interesting exchange with Richard Betts and Lord Lucas earlier today on the subject of climate model tuning. Lord L had put forward the idea that climate models are tuned to the twentieth century temperature history, something that was disputed by Richard, who said that they were only tuned to the current temperature state.

I think to some extent we were talking at cross purposes, because there is tuning and there is "tuning". Our exchange prompted me to revisit Mauritsen et al, a 2012 paper that goes into a great deal of detail on how one particular climate model was tuned. To some extent it supports what Richard said:

Bishop Hill  Jan 29, 2015

Climate: Models  Climate: sensitivity

Readers may recall Jochem Marotzke as the IPCC bigwig who promised that the IPCC would not duck the question of the hiatus in surface temperature rises and then promptly ensured that it did no such thing.

Yesterday, Marotzke and Piers Forster came up with a new paper that seeks to explain away the pause entirely, putting it all down to natural variability. There is a nice layman's explanation at Carbon Brief.

For each 15-year period, the authors compared the temperature change we've seen in the real world with what the climate models suggest should have happened.

Over the 112-year record, the authors find no obvious pattern in whether real-world temperature trends are closer to the upper end of what model project, or the lower end.

In other words, while the models aren't capable of capturing all the "wiggles" along the path of rising temperatures, they are slightly too cool just as often as they're slightly too warm.

And because the observed trend over the full instrumental record is roughly the same as the model one we are cordially invited to conclude that there's actually no problem.

If Carbon Brief is reporting this correctly then it's hilariously bad stuff. Everybody knows that the twentieth century is hindcast roughly correctly because the models are "tuned", usually via the aerosol forcing. So fast-warming/big aerosol cooling models hindcast correctly and so do slow-warming/small aerosol cooling models. The problem is that the trick of fudging the aerosol data so as to give a correct hindcast can't be applied to forecasts. Reality will be what reality will be. The fact is we have models with a wide range of TCRs and they have all been fudged. Some might turn out to be roughly correct. But it is just as possible that they are all wrong. Given the experience with out-of-sample verification and the output of energy budget studies, it may well be that it is more likely than not that they are all wrong.
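The compensation argument can be put into numbers with a toy sketch. Everything here is an assumption made for illustration, not taken from any actual model: warming is taken to scale linearly as TCR times net forcing divided by the forcing for doubled CO2, and the forcing values, the 0.8 K hindcast target and the two TCRs are hypothetical round numbers. Aerosol forcing is also assumed to stay at its tuned value in the forecast.

```python
F_2X = 3.7            # assumed forcing for doubled CO2, W/m^2
GHG_HISTORICAL = 2.8  # hypothetical historical greenhouse forcing, W/m^2
GHG_FUTURE = 5.8      # hypothetical future greenhouse forcing, W/m^2
TARGET_HINDCAST = 0.8 # hypothetical observed historical warming, K


def warming(tcr, ghg_forcing, aerosol_forcing):
    """Toy linear response: warming scales with net forcing."""
    return tcr * (ghg_forcing + aerosol_forcing) / F_2X


def tuned_aerosol(tcr):
    """Aerosol forcing that makes this model hit the hindcast target."""
    return TARGET_HINDCAST * F_2X / tcr - GHG_HISTORICAL


results = {}
for name, tcr in [("fast-warming", 2.0), ("slow-warming", 1.2)]:
    aerosol = tuned_aerosol(tcr)
    results[name] = {
        "aerosol": aerosol,
        "hindcast": warming(tcr, GHG_HISTORICAL, aerosol),
        # Assumption: aerosol forcing is held at its tuned value.
        "forecast": warming(tcr, GHG_FUTURE, aerosol),
    }

for name, r in results.items():
    print(f"{name}: aerosol {r['aerosol']:+.2f} W/m^2, "
          f"hindcast {r['hindcast']:.2f} K, forecast {r['forecast']:.2f} K")
```

In this toy both models reproduce the same hindcast, one with a large aerosol cooling and one with a small one, yet their forecasts differ by more than half a degree. A matching hindcast therefore says little about which TCR is right.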

Bishop Hill  Jan 5, 2015

BBC  Climate: Models  Climate: other

Fifteen years ago this week the BBC's In Our Time dedicated an episode to the subject of climate change (H/T Leo Hickman). In a break from its normal practice, Melvyn Bragg was joined by only two guests, only one of whom could even loosely be described as an academic. Sir John Houghton, the then chairman of the Intergovernmental Panel on Climate Change, would best be described as a scientific administrator, having previously been the chief executive of the Met Office; George Monbiot is of course an environmental campaigner and journalist, although for the occasion - perhaps hoping to be taken more seriously - he also described himself as visiting professor of philosophy at the University of Bristol.

2000 was an interesting time in the climate debate. With the world having just entered the third millennium, thoughts of catastrophic futures seem to have found fertile ground and the global warming scare was therefore starting to gain traction. The IPCC's Second Assessment Report had laid the foundations for the scare a few years before; the ink was barely dry on the Hockey Stick papers and the onslaught of the Third Assessment was not far away. This is the context for the BBC's decision to use an environmentalist and an environmentally minded bureaucrat to provide what the corporation calls "due balance".

Bishop Hill  Jan 5, 2015

Climate: Models

Here is a fascinating article from a blog I haven't come across before called "A Chemist in Langley":

...the vast majority of the warmist community have a worldview that stresses Type I [false positive] error avoidance while most skeptics work in a community that stresses Type II [false negative] error avoidance. Skeptics look at the global climate models and note that the models have a real difficulty in making accurate predictions. To explain, global climate models are complex computer programs filled with calculations based on science's best understanding of climate processes (geochemistry, global circulation patterns etc) with best guesses used to address holes in the knowledge base.

Bishop Hill  Jan 1, 2015

Climate: MetOffice  Climate: Models

Back in 2013 I wrote a report for GWPF about the official UKCP09 climate projections and Nic Lewis's discovery that the underlying model was incapable of simulating a climate that matched the real one as regards certain key features of the climate system. The final predictions in UKCP09 were based on a perturbed physics ensemble: a weighted average of a series of climate model runs, each with different key parameters tweaked, with the weighting in the final reckoning determined by how well the virtual climate produced matched the real one. As Lewis revealed, since the climate model output couldn't match the real one, we were effectively being asked to believe that a weighted average of unrealistic virtual climates would nevertheless produce realistic predictions.
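A minimal sketch of the weighting problem follows. It is not the UKCP09 method. The Gaussian skill weight, the tolerance `sigma`, and every number below are hypothetical and chosen only to show what normalisation does when none of the runs is close to the observed value.

```python
import math


def weighted_projection(simulated, projected, observed, sigma):
    """Weight each run by how well its simulated value matches observation.

    Uses an assumed Gaussian skill weight. Returns the normalised weights,
    the weighted-average projection, and the best un-normalised weight.
    """
    raw = [math.exp(-0.5 * ((s - observed) / sigma) ** 2) for s in simulated]
    total = sum(raw)
    weights = [r / total for r in raw]
    estimate = sum(w * p for w, p in zip(weights, projected))
    return weights, estimate, max(raw)


# Hypothetical ensemble: every run simulates the key feature far from
# the observed value of 1.0, so none of them is realistic.
simulated = [4.0, 4.5, 5.0]
projected = [3.0, 3.5, 4.0]  # hypothetical future warming per run, K

weights, estimate, best_raw = weighted_projection(
    simulated, projected, observed=1.0, sigma=0.5
)
print("normalised weights:", [round(w, 4) for w in weights])
print(f"weighted projection: {estimate:.2f} K")
print(f"best un-normalised weight: {best_raw:.1e}")
```

Normalisation makes the weights sum to one whatever the quality of the runs, so the weighted average still looks like a tidy prediction. Only the un-normalised weight of the best run shows how poorly the whole ensemble matches the observation.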

Bishop Hill  Dec 27, 2014

Climate: Models

Over the last week or so I've been spending a bit of time with a new paper from Gavin Schmidt and Steven Sherwood. Gavin needs no introduction of course, and Sherwood is also well known to BH readers, having come to prominence when he attempted a rebuttal of the Lewis and Crok report on climate sensitivity, apparently without actually having read it.

The paper is a preprint that will eventually appear in the European Journal for Philosophy of Science and can be downloaded here. It is a contribution to an ongoing debate in philosophy of science circles as to how computer simulations fit into the normal blueprint of science, with some claiming that they are something other than a hypothesis or an experiment.

I'm not sure whether this is a particularly productive discussion as regards the climate debate. If a computer simulation is to be policy-relevant its output must be capable of being an approximation to the real world, and must be validated to show that this is the case. If climate modellers want to make the case that their virtual worlds are neither hypothesis nor experiment, or to use them to address otherwise intractable questions, as Schmidt and Sherwood note happens, then that's fine so long as climate models remain firmly under lock and key in the ivory tower.

Unfortunately, Schmidt and Sherwood seem overconfident in GCMs:

...climate models, while imperfect, work well in many respects (that is to say, they provide useful skill over and above simpler methods for making predictions).

Bishop Hill  Dec 18, 2014

Climate: Models  Climate: other  Ethics

Judith Curry quotes this sentence from Peter Lee's GWPF essay on climate change and ethics:

Omitting the ‘doubts, the caveats, the ifs, ands and buts’ is not a morally neutral act; it is a subtle deception that calls scientific practice into disrepute.

I couldn't help but recall the reaction from climate scientists when I said it was "grossly misleading" of Keith Shine to omit any caveats when explaining the efficacy of GCMs to parliamentarians.

I stand by what I said.

Josh  Dec 16, 2014

Climate: McIntyre  Climate: Models  Josh  Richard Betts

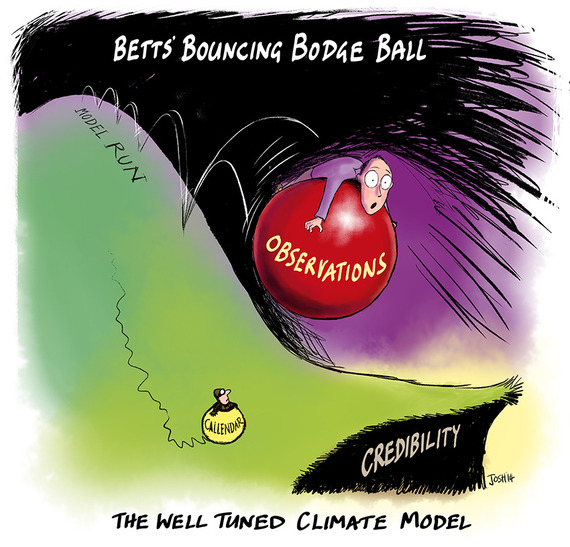

Talking of Climate Models, there is another great Climate Audit post titled "Unprecedented" Model Discrepancy where Richard Betts, once again, provides cartoon inspiration in the comments.

It’s a bit like watching a ball bouncing down a rocky hillside. You can predict some aspects of it behaviour but not others. You can predict it will generally go downhill, and if you see a big rock in it’s path you can be reasonably confident that it will hit it and bounce off, but you can’t predict the size and direction of all the little bounces in between.

Bishop Hill  Dec 16, 2014

Climate: Models

FiveThirtyEight has an amusing article about the competing explanations for the California drought. A bunch of NOAA scientists have reported that it's all down to natural variability, noting that they are pretty sure of their results:

This is the first study to show that a West Coast dry pattern could be triggered by warmer water anomalies in the tropical western Pacific. Seager said researchers feel “pretty confident” about the association because it shows up in all their models. (One objective of the study was to look for factors that could help predict future droughts.)

This seems to me to be a fairly hilarious example of the fallacy I lampooned in this posting a few months back.

Bishop Hill  Nov 24, 2014

Climate: Models  Climate: Surface

Via Nic Lewis and Frank Bosse comes a link to a page at the Berkeley Earth Surface Temperature project (BEST). Richard Muller and his team have compared their results to the output of a series of GCMs and the results are not exactly pretty, as one of the headlines explains:

Many models still struggle with overall warming; none replicate regional warming well.